Mar 7, 2025

PyFlow.ts: Bridging ML Research with Production Using a Single Decorator

Discover how PyFlow.ts eliminates the "last mile" problem in ML deployment by connecting Python ML models to TypeScript frontends with minimal code.

Marius Constantin-Dinu

Share this article

Integrating ML Research with Production: The PyFlow.ts Approach

In the world of machine learning and AI, there's a familiar pain point that haunts both researchers and engineers: the transition from experimental code to production-ready applications. As an ML researcher, I've spent countless hours developing elegant algorithms and models, only to face the frustrating reality of having to rewrite everything into a web-friendly format, complete with API layers, type definitions, and client-side code.

This "last mile" problem has been a persistent thorn in our industry's side. That's why I created PyFlow.ts, a lightweight bridge between Python and TypeScript that eliminates the friction and boilerplate code typically required to connect ML models to frontend applications.

The ML Deployment Problem

Let me paint a familiar picture:

You've spent weeks training a sophisticated machine learning model. It works beautifully in your Jupyter notebook. The results are promising, and you're excited to deploy it as part of a web application. Then reality hits:

API Development: Writing a REST API with proper endpoints, request/response models, and error handling

Type Safety: Manually translating Python types to TypeScript interfaces

Client Generation: Creating client-side code that communicates with your API

Documentation: Keeping API specs and clients in sync

Maintenance: Managing changes as your model evolves

For many data scientists and ML engineers, this process is not just tedious—it's a complete context switch from the creative modeling work they excel at. Each of these steps requires specialized knowledge and adds days or weeks to the development cycle.

Small development teams are hit especially hard. They often lack dedicated API specialists and frontend developers who can bridge this gap efficiently.

Enter PyFlow.ts: Bridging the Python-TypeScript Divide

PyFlow.ts is designed to solve exactly this problem through a radically simple approach: just add a decorator.

Here's a simple example:

That's it. No API routes, no serialization code, no OpenAPI specs to maintain. Just Python code with type annotations and a simple decorator.

On the TypeScript side, you simply use the generated code:

Behind the scenes, PyFlow.ts:

Inspects your Python code and type annotations

Generates a FastAPI backend server

Creates TypeScript type definitions and client code

Handles serialization, API communication, and type conversion automatically

Beyond Basic Integration: Advanced Features

While the simple decorator pattern is powerful on its own, PyFlow.ts offers advanced features that make it suitable for complex applications:

1. Custom Type Mappings

PyFlow.ts automatically maps Python types to TypeScript types. Common mappings include:

Python Type | TypeScript Type |

|---|---|

|

|

|

|

|

|

|

|

|

|

| Array or typed tuple |

|

|

|

|

Classes | Classes with the same name |

You can also define custom classes with type annotations that are automatically converted to TypeScript classes:

This generates properly defined TypeScript interfaces:

2. Class Inheritance

PyFlow.ts respects Python class inheritance and generates the appropriate TypeScript classes:

3. Directory Scanning

For larger projects with multiple Python modules, PyFlow.ts can recursively scan directories:

This is particularly useful for maintaining organization in larger ML projects while still having a unified API.

Why This Matters for ML Teams

Traditional approaches to connecting Python ML models to TypeScript frontends involve:

REST APIs: Developing a FastAPI/Flask/Django API, creating OpenAPI specs, and generating clients

gRPC: Writing protocol buffers, generating stubs, and handling streaming

WebSocket solutions: Building real-time communication layers

Custom solutions: Creating bespoke integration points

Each of these approaches requires significant engineering effort—often days to weeks of work—and specialized knowledge that many data scientists and ML researchers don't have.

PyFlow.ts collapses this work into minutes. Add decorators, run a command, and you're done.

Real-World Applications of PyFlow.ts

The power of PyFlow.ts truly shines when applied to real-world ML problems. Let's explore some common use cases:

1. Computer Vision Applications

ML researchers working on computer vision can rapidly build web interfaces for their models:

With this simple implementation, frontend developers can immediately build interactive UIs like:

Drag-and-drop image analysis tools

Live webcam object detection

Video processing dashboards

2. Natural Language Processing Pipelines

NLP researchers can expose complex language processing pipelines to web applications:

3. Financial Analysis and Algorithmic Trading

For FinTech applications, PyFlow.ts bridges the gap between complex financial models in Python and interactive trading interfaces:

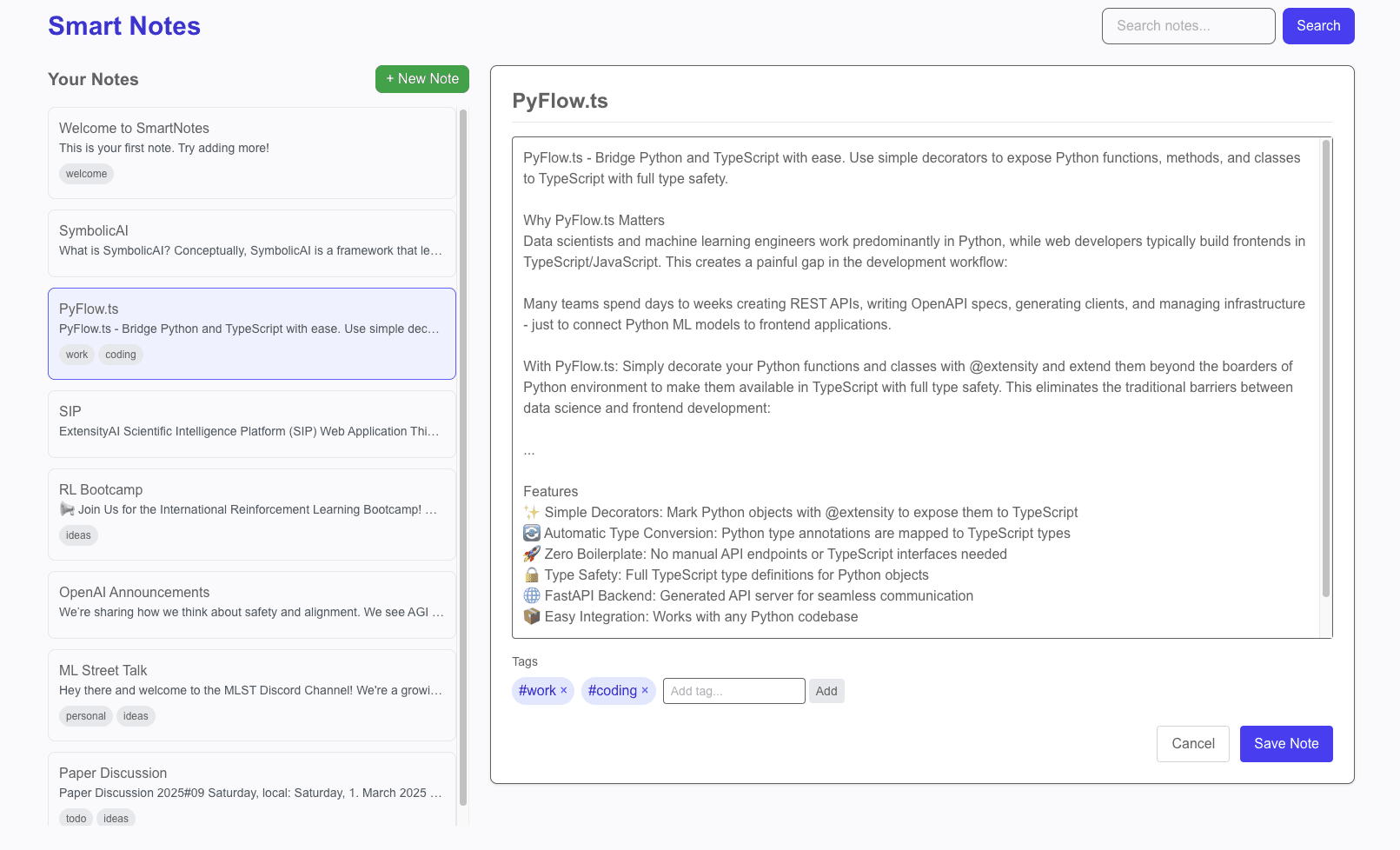

Case Study: Building a Smart Notes App

To demonstrate the full power of PyFlow.ts, let's build a complete application: a smart notes app with AI-powered suggestions. This example showcases how seamlessly ML features can be integrated into a web application.

The full app is available here.

Here's what our app will include:

A smart notes app that provides ML-powered suggestions based on your notes

Automatic categorization and tagging

Semantic search capabilities

Let's start with our Python backend:

Now, let's initialize PyFlow.ts to generate our TypeScript code. If you use our tutorial code you can do the following:

With this single command, PyFlow.ts generated all the TypeScript interfaces, API client code, and backend server code we need. Now we can create our Next.js frontend that leverages these ML capabilities:

Our note editor component can directly use the ML services for tag suggestions and content continuation:

Performance Considerations and Best Practices

As you build more complex applications with PyFlow.ts, keep these performance considerations and best practices in mind:

1. Structure Your Python Code for Clarity

Organize your ML code into cohesive classes with clear responsibilities:

2. Use Type Annotations Extensively

The quality of your TypeScript classes depends on your Python type annotations:

3. Optimize Data Transfer

Remember that all function calls between TypeScript and Python happen over HTTP. For large data transfers:

Use batching for multiple operations when possible

Consider binary formats for large datasets

Use compression for text data

For example:

4. Leverage State Management

PyFlow.ts automatically maintains state for class instances, allowing you to build stateful applications easily:

5. Handle Errors Gracefully

Make sure your Python code handles errors properly, as they'll be transmitted to the TypeScript side:

PyFlow.ts vs Traditional Approaches

When comparing PyFlow.ts to traditional integration approaches, the benefits become clear:

Feature | PyFlow.ts | REST API | gRPC | Custom Solutions |

|---|---|---|---|---|

Development Time | Minutes | Days to Weeks | Days to Weeks | Weeks to Months |

Code Generation | Automatic | Manual/OpenAPI | Proto Buffers | Custom |

Type Safety | Full | Partial | Full | Varies |

Boilerplate Code | Minimal | Extensive | Moderate | Extensive |

Learning Curve | Low | Moderate | High | High |

API Documentation | Auto-generated | Manual/OpenAPI | Proto Files | Custom |

State Management | Built-in | Manual | Manual | Varies |

Code Maintenance | Low | High | Moderate | High |

Looking to the Future: Roadmap and Development

While PyFlow.ts already solves many problems, if you like and support our work we have concepts already mapped out for expanding its capabilities:

WebSocket Support: For more efficient bidirectional communication

Batch Processing API: Optimized for high-throughput workloads

Edge Deployment Options: Including potential WASM compilation for certain use cases

Enhanced Type Mapping: Supporting more complex Python types and generics

Vision: Model-Driven Development with LLMs

Perhaps the most exciting potential is in model-driven development with large language models (LLMs) and vision language models (VLMs).

As we increasingly use AI to generate code, the simpler our frameworks are, the more likely the AI will generate correct code. With PyFlow.ts, an LLM only needs to generate the core Python and TypeScript logic, not all the complex API and integration layers.

This drastically increases the likelihood that LLM-generated code will work on the first try, enabling entire applications to be fully generated and raised from prompt to production with minimal human intervention.

Getting Started with PyFlow.ts

Ready to try PyFlow.ts on your own projects? It's as simple as:

Then decorate your Python functions and classes:

Generate the TypeScript code:

And use it in your frontend:

PyFlow.ts is open source and available on GitHub: ExtensityAI/PyFlow.ts

Join the ExtensityAI Community

One of the most exciting aspects of PyFlow.ts is the growing community of ML practitioners and web developers collaborating more effectively.

Here's how you can get involved:

Star the GitHub repository: Help others discover PyFlow.ts

Share your use cases: We're collecting examples of PyFlow.ts in production

Contribute: Whether it's documentation, bug fixes, or new features

Join our Discord: Connect with other developers using PyFlow.ts

Conclusion

The "last mile" problem in ML deployment has been a persistent friction point that has slowed innovation and limited the impact of AI research. With PyFlow.ts, I wanted to create a lightweight solution that would eliminate this friction entirely.

By allowing Python code to be directly exposed to TypeScript with full type safety, PyFlow.ts bridges the gap between research and production, enabling ML teams to focus on what they do best: creating amazing models and delivering value to users.

Whether you're a solo ML researcher looking to showcase your work, a startup trying to ship your first ML-powered product, or an enterprise team bridging the gap between research and production, PyFlow.ts can help you focus on what matters—creating value with machine learning—rather than the infrastructure that connects it all together.

Give it a try, and let me know what you build with it!

Marius-Constantin Dinu is the creator of PyFlow.ts and co-founder of ExtensityAI. You can find me on Twitter, LinkedIn, or at my personal website.